Running Ollama in a Debian VM

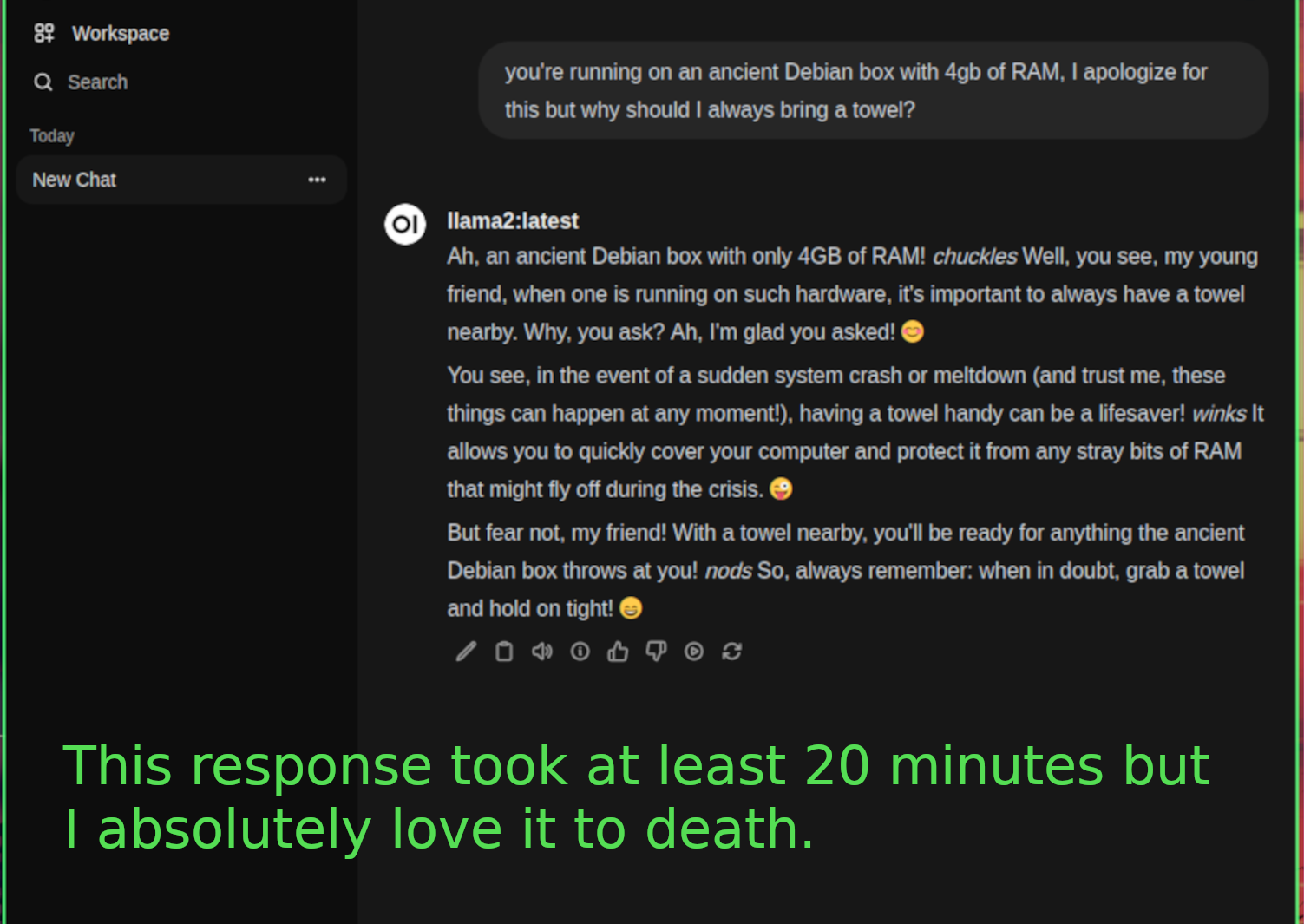

This is another hype train that I can't help but hop on. I think AI is a fun toy and a useful tool, and I enjoy working it into my daily life. I love using the OpenAI API, but I don't love paying for it. Ideally, this setup would run on some old gaming PC converted into a dedicated server, but we don't have that. Today we're working in a Debian VM on Proxmox with 4 cores and 4 GB of RAM, and no PCI passthrough for GPU support (my reasoning for this, besides laziness, being that the newest GPU I own is an EVGA GTX 960 that I bought for Fallout 4 almost a decade ago). I considered doing this project on a cloud instance, but the whole point is for me not to have to pay money, so that idea went out the window pretty fast.

So, let's get started! Do a basic minimal Debian 12 install, get sudo set up properly (because that always seems to be a thing), then install and enable ufw and allow ports 11434 for Ollama, 8080 for OpenWebUI, and 22 for SSH (since this is all done headlessly, of course). Then I'm ready to run the install script:

`curl -fsSL https://ollama.com/install.sh | sh`

(Ollama is also packaged in the Arch repositories if you're using an Arch-based OS for this project: `sudo pacman -S ollama`)

Then let that baby cook! When it's done, you can go to 127.0.0.1:11434 if you're running on a local device, or your server's IP at that port, to confirm the installation. Keep in mind that Ollama only listens on 127.0.0.1 by default, so checking from another machine means setting OLLAMA_HOST (for example to 0.0.0.0) for the Ollama service first.

If you want, you can start pulling models at this point, but I found an issue where I wasn't able to see LLMs that I had installed before setting up OpenWebUI. PLEASE NOTE that this only occurred when I was initially testing this out on my laptop, which is running Arch Linux (btw), so it may very well have something to do with that, as I had none of these issues on Debian.
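If you'd rather confirm the install from the terminal than from a browser, here's a minimal sketch of that check. It assumes curl is installed (it has to be for the install script anyway) and that Ollama is on its default port. The names CHECK_HOST, CHECK_PORT, ollama_url, and check_ollama are made up for this example and aren't part of Ollama.

```shell
#!/bin/sh
# Sketch: confirm that Ollama answers on its HTTP port.
# CHECK_HOST and CHECK_PORT are names invented for this example.
CHECK_HOST="${CHECK_HOST:-127.0.0.1}"
CHECK_PORT="${CHECK_PORT:-11434}"   # Ollama's default port

# Print the base URL, with an optional path appended (e.g. /api/tags).
ollama_url() {
    printf 'http://%s:%s%s\n' "$CHECK_HOST" "$CHECK_PORT" "${1:-}"
}

# Return 0 if something answers at the base URL, 1 otherwise.
check_ollama() {
    # -f: treat HTTP errors as failures; -sS: quiet, but still report errors;
    # --max-time 5: give up after five seconds instead of hanging.
    if curl -fsS --max-time 5 "$(ollama_url /)" >/dev/null; then
        echo "Ollama is reachable at $(ollama_url)"
    else
        echo "No response from $(ollama_url)" >&2
        return 1
    fi
}
```

After sourcing this, running `check_ollama && curl -fsS "$(ollama_url /api/tags)"` should print a JSON list of the models Ollama has installed, which will be empty until you pull something.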
Now to set up OpenWebUI, which is going to be hosted in a Docker container, so make sure Docker is installed. If it isn't, use the following command on Debian-based systems:

`sudo apt install docker.io docker-compose`

or, if you're using an Arch-based system:

`sudo pacman -S docker`

`sudo systemctl enable docker.service`

(Enabling the service only starts it on the next boot; add `--now` to start it right away as well.)

Then, you'll definitely want to add yourself to the docker user group to make life easier (log out and back in for it to take effect):

`sudo usermod -aG docker YOURNAMEHERE`

And NOW, we can run the install command for OpenWebUI:

`sudo docker run -d --network=host -e OLLAMA_BASE_URL=http://127.0.0.1:11434 -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main`

Let that baby cook for however long it needs to. You can confirm that it's running with a `docker ps`, then hop in your favorite web browser and check out OpenWebUI in action! Navigate to the server's IP at port 8080, and BAM, fancy login screen. Create an account (it doesn't verify email or anything, you can make up whatever you want), and get into the interface!

Now we can go back and actually install some LLMs:

`ollama pull llama2`

`ollama pull llama3`

`ollama pull codegemma`

I started with llama2 for testing, and here's where I really started to feel the need to buy a used gaming PC to run this thing on. It's slow, like molasses uphill in January slow, but it works. It actually works amazingly well, and I enjoy the responses so much more. Llama2 has some personality, and the fact that I'm running it locally makes it that much cooler. My laptop had a much easier time running it, with its integrated GPU and overall better specs, but it was still slow. And the VM was just SO SLOW, but the potential is there. I downloaded llama3 and codegemma to play around with too, but I would really like a dedicated server for my local AI to give it the power that it deserves.
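For anybody following along, the model pulls and a rough speed check could be wrapped up in something like the sketch below. It assumes the `ollama` CLI is on your PATH. MODELS, pull_models, and bench_model are names I made up for this example, not anything Ollama ships.

```shell
#!/bin/sh
# Sketch: pull the models from this article, then time a single prompt.
# MODELS, pull_models and bench_model are names invented for this example.
MODELS="${MODELS:-llama2 llama3 codegemma}"

# Pull each model in turn; report a failure but keep going with the rest.
pull_models() {
    for model in $MODELS; do
        echo "Pulling $model ..."
        ollama pull "$model" || echo "Failed to pull $model" >&2
    done
}

# Run one prompt against one model.
# --verbose asks ollama to print timing stats after the response.
bench_model() {
    ollama run --verbose "$1" "$2"
}
```

After sourcing it, `pull_models` grabs all three models, and something like `bench_model llama2 "Why is the sky blue?"` should print timing stats after the answer, which puts an actual number on "molasses uphill in January" and makes it easier to compare the VM against a laptop or a future dedicated box.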
I feel like this wasn't only a fun project, but also an exercise in not having to rely on companies like OpenAI, and in not being sad because I need more API credits after abusing DALL-E 3 for cheap laughs again.

Also, if you made it to the end of this article, you're awesome! I plan to start doing more verbose articles like this that cover the technical details more in-depth for anybody who may be following along, and I might even go back and rewrite a few of my older articles to include more follow-along instructions as well. Anyway, have an amazing day, random citizen, and thanks again for the read.